In the realm of large language models and chatbots, response streaming has become a popular feature: output is delivered token by token or word by word, so users can read the response while the model is still generating it. For instance, when you enter a prompt into ChatGPT, the words start appearing as ChatGPT streams its response to you through its web interface.

This is particularly beneficial for long text generation tasks, where users would otherwise have to wait for the complete response before seeing anything. This article looks at implementing streaming with LangChain for large language models and chatbots, with a focus on using OpenAI’s gpt-3.5-turbo model via LangChain’s ChatOpenAI object.

Streaming, in its simplest form, is a process that allows data to be processed as a steady and continuous stream. This is in contrast to traditional methods of data processing, where all data must be loaded into memory before it can be processed. The benefits of streaming are manifold, including improved efficiency, reduced memory usage, and the ability to process large volumes of data in real time.

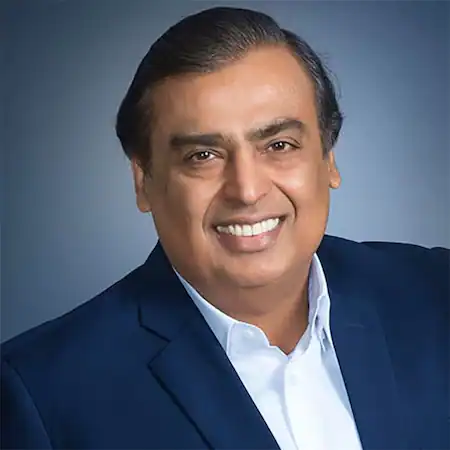

ChatGPT response streaming

Implementing response streaming can be straightforward for basic use cases. However, the complexity increases when integrating LangChain and agents, or when streaming data from an agent to an API. This is due to the additional layer of logic that an agent adds around the language model.

In LangChain, streaming can be enabled by using two parameters when initializing the language model: ‘streaming’ and ‘callbacks’. The ‘streaming’ parameter activates streaming, while the ‘callbacks’ parameter takes the handlers that receive each new token as it is generated. The streaming process can be monitored by observing the output of each newly generated token. An excellent video by James Briggs provides an introduction and a starting point for deploying streaming in production using FastAPI.
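A minimal sketch of those two parameters follows. It assumes a 2023-era LangChain release, where `ChatOpenAI` is imported from `langchain.chat_models` and `StreamingStdOutCallbackHandler` from `langchain.callbacks.streaming_stdout` (newer releases move these imports), and an `OPENAI_API_KEY` environment variable. The helper name `build_streaming_llm` and the temperature value are illustrative, not part of the original walkthrough.

```python
# Keyword arguments that turn streaming on; values other than `streaming`
# are illustrative choices.
STREAMING_KWARGS = {
    "model_name": "gpt-3.5-turbo",
    "temperature": 0.0,
    "streaming": True,  # deliver tokens as they are generated
}


def build_streaming_llm():
    """Return a ChatOpenAI object that prints each token as it arrives."""
    # Imported lazily so the module still loads where LangChain is missing.
    # These import paths are an assumption tied to 2023-era releases.
    from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
    from langchain.chat_models import ChatOpenAI

    # ChatOpenAI reads the API key from OPENAI_API_KEY when none is passed.
    return ChatOpenAI(
        callbacks=[StreamingStdOutCallbackHandler()],  # called on every new token
        **STREAMING_KWARGS,
    )
```

Calling the returned object with a list of chat messages should then print the reply to the terminal token by token rather than all at once.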


When using an agent in LangChain, the agent returns the output from the language model in JSON format. This output can be parsed to determine which tool the agent wants to call or to extract its final answer. LangChain has a built-in callback handler for outputting the final answer from an agent. However, for more flexibility, a custom callback handler can also be used.
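To make the JSON output concrete, here is a small sketch of parsing it. The key names `action` and `action_input` and the `"Final Answer"` value are assumptions about the agent's output format; check them against what your agent actually emits. Both function names are hypothetical.

```python
import json
from typing import Tuple


def parse_agent_output(raw: str) -> Tuple[str, str]:
    """Split the agent's JSON output into (action, action_input)."""
    data = json.loads(raw)
    # Assumed shape: {"action": "...", "action_input": "..."}
    return data["action"], data["action_input"]


def is_final_answer(action: str) -> bool:
    """True when the agent is answering rather than calling a tool."""
    return action == "Final Answer"
```

For example, `parse_agent_output('{"action": "Final Answer", "action_input": "Paris"}')` gives the action name and the answer text as a pair, and any other action name would be treated as a tool call.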

The custom callback handler can be set to start streaming once the final answer section is reached. This provides more granular control over the streaming process, allowing developers to tailor the streaming output to their specific needs.
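One way such a handler might look is sketched below. It is written as a plain class so it runs without LangChain installed; in a real project it would typically subclass LangChain's `BaseCallbackHandler`, whose `on_llm_new_token` hook it mirrors. The class name, the `emit` parameter, and the `"Final Answer"` marker are assumptions made for illustration.

```python
import sys
from typing import Callable


def print_token(token: str) -> None:
    """Default sink: write the token to stdout without a newline."""
    sys.stdout.write(token)
    sys.stdout.flush()


class FinalAnswerHandler:
    """Stay silent until the final answer marker appears, then emit tokens."""

    MARKER = "Final Answer"  # assumed marker in the agent's output

    def __init__(self, emit: Callable[[str], None] = print_token) -> None:
        self.buffer = ""  # text seen before the marker
        self.in_final_answer = False
        self.emit = emit

    def on_llm_new_token(self, token: str, **kwargs) -> None:
        if self.in_final_answer:
            self.emit(token)
            return
        # The marker may be split across tokens, so match on the buffer.
        self.buffer += token
        if self.MARKER in self.buffer:
            self.in_final_answer = True
            self.buffer = ""
```

Note that this version emits everything after the marker, including any JSON punctuation that follows it.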

To implement streaming with an API, a streaming response object is needed. This requires running the agent logic and the loop for passing tokens through the API concurrently. This can be achieved by using async functions and creating a generator that runs the agent logic in the background while the tokens are being passed through the API.
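The concurrency pattern described here can be sketched with the standard library alone. In the code below, `fake_agent` is a stand-in for the real agent call (which would push tokens from a callback handler), and `token_stream` is the generator that a streaming response consumes. The `create_app` helper, the `/chat` route, and the media type are assumptions; it uses FastAPI's `StreamingResponse` and imports FastAPI lazily so the rest of the sketch runs without it.

```python
import asyncio
from typing import AsyncIterator

_DONE = object()  # sentinel marking the end of the token stream


async def fake_agent(query: str, queue: asyncio.Queue) -> None:
    """Stand-in for the agent; a real one would enqueue tokens from a callback."""
    try:
        for token in ["You ", "asked: ", query]:
            await asyncio.sleep(0)  # yield control, as a network call would
            await queue.put(token)
    finally:
        await queue.put(_DONE)  # always signal completion, even on error


async def token_stream(query: str) -> AsyncIterator[str]:
    """Run the agent in the background and yield tokens as they arrive."""
    queue: asyncio.Queue = asyncio.Queue()
    task = asyncio.create_task(fake_agent(query, queue))
    try:
        while True:
            item = await queue.get()
            if item is _DONE:
                break
            yield item
    finally:
        await task  # surface any exception raised inside the agent


def create_app():
    """Build a FastAPI app exposing the stream (requires fastapi installed)."""
    from fastapi import FastAPI
    from fastapi.responses import StreamingResponse

    app = FastAPI()

    @app.get("/chat")
    async def chat(query: str):
        return StreamingResponse(token_stream(query), media_type="text/event-stream")

    return app
```

The queue decouples the two sides: the agent can keep generating while the response loop forwards whatever is already available.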

The custom callback handler can be modified to return only the desired part of the agent’s output when streaming. This allows for a more targeted streaming output, reducing the amount of unnecessary data that is streamed.
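As an illustration of returning only the desired part, the sketch below yields just the text inside the final answer's `action_input` string and drops the surrounding JSON. The exact prefix, including its spacing, is an assumption about how the model formats its output, and the function name is hypothetical. The same logic could live inside a callback handler's `on_llm_new_token` method.

```python
from typing import Iterable, Iterator

MARKER = "Final Answer"       # assumed marker for the final answer
PREFIX = '"action_input": "'  # assumed opening of the answer string


def stream_action_input(tokens: Iterable[str]) -> Iterator[str]:
    """Yield only the text inside the final answer's action_input value."""
    buffer = ""      # text seen before the value starts
    inside = False   # True once we are inside the string value
    escaped = False  # True when the previous character was a backslash
    for token in tokens:
        if not inside:
            buffer += token
            marker_at = buffer.find(MARKER)
            if marker_at == -1:
                continue
            start = buffer.find(PREFIX, marker_at)
            if start == -1:
                continue
            inside = True
            token = buffer[start + len(PREFIX):]  # remainder is answer text
        out = []
        for ch in token:
            if escaped:
                out.append(ch)  # escaped character, passed through as-is
                escaped = False
            elif ch == "\\":
                out.append(ch)  # JSON escapes are not decoded in this sketch
                escaped = True
            elif ch == '"':
                # Unescaped quote closes the value: flush and stop.
                if out:
                    yield "".join(out)
                return
            else:
                out.append(ch)
        if out:
            yield "".join(out)
```

Tool calls, which lack the final answer marker, produce no output at all with this approach.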

However, implementing streaming with LangChain and agents is not without its challenges. Additional testing and logic may be needed to handle cases where the agent does not generate the expected output. This can involve creating custom error handling logic, or implementing additional checks to ensure the output is as expected.
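One possible check is sketched below: if the stream ends without the expected final answer marker ever appearing, the buffered text is sent as a fallback so the user is not left with an empty response. The function name and the `"Final Answer"` default are assumptions, and whether raw output is an acceptable fallback depends on the application.

```python
from typing import Iterable, Iterator


def stream_final_answer_or_fallback(
    tokens: Iterable[str], marker: str = "Final Answer"
) -> Iterator[str]:
    """Stream tokens after the marker; if it never appears, yield everything."""
    buffer = ""
    streaming = False
    for token in tokens:
        if streaming:
            yield token
            continue
        buffer += token
        if marker in buffer:
            streaming = True
    # The model never produced the expected structure: fall back to raw text.
    if not streaming and buffer:
        yield buffer
```

The trade-off is that the fallback text arrives in one piece at the end rather than incrementally.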

Implementing response streaming with LangChain for large language models and chatbots is a complex but rewarding process. It offers numerous benefits, including improved efficiency, reduced memory usage, and the ability to process large volumes of data in real time. However, it also presents challenges, particularly when integrating LangChain and agents, or when streaming data from an agent to an API. With careful planning and testing, these challenges can be overcome, resulting in a robust and efficient streaming implementation.
