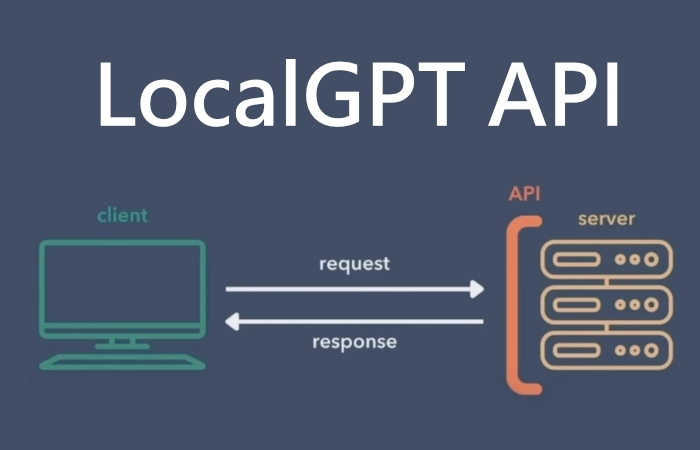

If you would prefer not to share your information or data with OpenAI or similar AI providers, you might be interested in this tutorial, which provides an overview of how you can use the LocalGPT API to create your own personal AI assistant.

LocalGPT is a powerful tool for anyone looking to run a GPT-like model locally, allowing for privacy, customization, and offline use. It lets you ask questions about specific documents or datasets and find answers in them, all without relying on an internet connection or external servers. The project is available on GitHub.

What is LocalGPT and how does it work

- Inspiration: LocalGPT is inspired by another project, privateGPT. It follows a similar concept but adds its own features.

- Model Replacement: LocalGPT replaces the GPT4All model with the Vicuna-7B model and uses InstructorEmbeddings instead of LlamaEmbeddings.

- Private and Local Execution: The project is designed to run entirely on a user’s local machine, ensuring privacy as no data leaves the execution environment. This allows for the ingestion of documents and question-asking without an internet connection.

- Ingesting Documents: Users can ingest various types of documents (.txt, .pdf, .csv, .xlsx) into a local vector store. The documents are then used to create embeddings and provide context for the model’s responses.

- Question Answering: Users can ask questions about their documents, and the model answers using the local data that has been ingested. The responses are generated by a local large language model (LLM), such as Vicuna-7B.

- Built with Various Technologies: LocalGPT is constructed using tools and technologies like LangChain, Vicuna-7B, InstructorEmbeddings, and Chroma vector store.

- Environment and Compatibility: It provides instructions for setting up the environment through Conda or Docker, and it supports running on both CPU and GPU. Specific instructions are also provided for different systems, such as NVIDIA GPUs and Apple Silicon (M1/M2).

- UI and API Support: The project includes the ability to run a local UI and API for interacting with the model.

- Flexibility: It offers options to change the underlying LLM and provides guidance on choosing among the models available on Hugging Face.

- System Requirements: LocalGPT requires Python 3.10 or later and may need a C++ compiler, depending on the system.
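The ingest-then-ask flow described in the list above is a form of retrieval-augmented generation. The sketch below is a dependency-free illustration of that flow, not LocalGPT’s actual code: a toy word-count embedding stands in for InstructorEmbeddings, a plain Python list stands in for the Chroma vector store, and the last step only builds the prompt that would be handed to the local LLM instead of calling Vicuna-7B. All function and class names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into overlapping windows of words (assumes overlap < chunk_size)."""
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text):
    """Toy embedding: lowercase word counts (LocalGPT uses InstructorEmbeddings instead)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector store such as Chroma: rows of (embedding, chunk, source)."""

    def __init__(self):
        self.rows = []

    def ingest(self, source, text):
        # Ingestion: split the document, embed each chunk, and keep its source name.
        for chunk in chunk_text(text):
            self.rows.append((embed(chunk), chunk, source))

    def search(self, query, k=2):
        # Retrieval: rank stored chunks by similarity to the embedded question.
        query_vec = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(query_vec, row[0]), reverse=True)
        return [(chunk, source) for _, chunk, source in ranked[:k]]


def build_prompt(question, store, k=2):
    """Combine retrieved chunks and the question into the prompt a local LLM would receive."""
    context = "\n".join(f"[{source}] {chunk}" for chunk, source in store.search(question, k))
    return f"Answer using only this context.\n{context}\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.ingest("hr.txt", "Employees accrue 20 days of paid vacation per year.")
    store.ingest("it.txt", "Laptops must be encrypted and patched every month.")
    print(build_prompt("How many vacation days do employees get?", store, k=1))
```

In LocalGPT itself, the assembled prompt would then go to the local model to generate the answer; swapping the toy pieces for real embeddings and a real vector store changes the quality of retrieval, not the shape of the pipeline.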

LocalGPT is not just about privacy; it’s also about accessibility. By hosting a LocalGPT instance on a powerful machine in the cloud, you can make API calls to it from your own applications. Clients can then run those applications without needing a powerful GPU of their own, and the setup can scale across multiple computers or machines.

Build your own private personal AI assistant

The process involves a series of steps: cloning the repo, creating a virtual environment, installing the required packages, defining the model in the constants.py file, and running the API and GUI. It’s designed to be user-friendly, and a step-by-step video tutorial created by Prompt Engineering walks through it, so even those new to AI can take advantage of this technology.
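Before creating the environment and installing packages, it can help to confirm the system requirements listed earlier: Python 3.10 or later and, on some systems, a C++ compiler. The script below is a hedged preflight sketch using only the standard library; it is not part of LocalGPT, and the compiler executables it looks for (g++, clang++, and MSVC’s cl) are assumptions about common toolchains.

```python
import os
import shutil
import sys

# Assumption: common C++ compiler executables (GCC, Clang, MSVC); the article only
# says a compiler "may" be needed, so an empty result is not necessarily a problem.
CANDIDATE_COMPILERS = ("g++", "clang++", "cl")


def check_prerequisites(min_version=(3, 10)):
    """Collect simple preflight checks to run before installing the project's requirements."""
    return {
        # The article lists Python 3.10 or later.
        "python_version": ".".join(map(str, sys.version_info[:3])),
        "python_ok": sys.version_info[:2] >= tuple(min_version),
        # Compiler executables found on PATH.
        "cpp_compilers": [name for name in CANDIDATE_COMPILERS if shutil.which(name)],
        # True inside a venv/virtualenv (prefix differs from the base interpreter)
        # or when a conda environment is active.
        "isolated_env": sys.prefix != sys.base_prefix or "CONDA_PREFIX" in os.environ,
    }


if __name__ == "__main__":
    for key, value in check_prerequisites().items():
        print(f"{key}: {value}")
```

Running it inside the freshly created environment is a quick way to catch a too-old interpreter before a long package install fails partway through.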

LocalGPT applications

LocalGPT is not just a standalone tool; it’s a platform for building applications. The API runs on localhost on port 5110, and a separate UI can be run to interact with it. This UI lets users upload documents, add them to a knowledge base, and ask questions about those documents, demonstrating how the LocalGPT API can be used to build more sophisticated applications.

Furthermore, the LocalGPT API can be served in the cloud, with local UIs making calls to it. This opens up many possibilities for developing private, secure, and scalable AI-driven solutions.
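Since the bundled UI is simply a client of the API, any program can play the same role. The sketch below shows how a Python script might send a question to the API using only the standard library. The port (5110) comes from the article; the endpoint path `/api/prompt_route` and the `user_prompt` form field reflect the project’s API script at the time of writing and should be treated as assumptions to verify against your own copy. This is not an official client.

```python
import json
import urllib.parse
import urllib.request

# Port from the article; the path and form field name are assumptions to verify.
DEFAULT_BASE_URL = "http://localhost:5110"
PROMPT_PATH = "/api/prompt_route"


def build_prompt_request(question, base_url=DEFAULT_BASE_URL):
    """Build a POST request that sends the question as form-encoded data."""
    body = urllib.parse.urlencode({"user_prompt": question}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + PROMPT_PATH,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def ask(question, base_url=DEFAULT_BASE_URL, timeout=300):
    """Send the question and decode the JSON reply (requires a running API server)."""
    # A generous timeout, since local models can take a while to answer.
    with urllib.request.urlopen(build_prompt_request(question, base_url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the API running, `ask("What does the contract say about termination?")` would return the decoded JSON reply. Serving the API from a cloud machine only changes `base_url`, for example to a hypothetical `http://my-gpu-host:5110`; the client code stays the same.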

One of LocalGPT’s strengths is its flexibility. The model definition has been moved to constants.py, so users can define the model they want to use in that one file. The code walkthrough in the video tutorial explains how the code works, including how files are stored, how the vector DB is created, and how the API functions are controlled. This level of insight and customization lets users tailor solutions to their specific needs.
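To illustrate why keeping the model definition in one place helps, here is a hypothetical settings object in that spirit. It is not LocalGPT’s code: the fields loosely mirror the kind of values the project’s constants file holds (a Hugging Face model ID, an optional weights file name for quantized models, and an embedding model), and the repository IDs are example values only.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical single source of truth for model settings."""
    model_id: str                   # Hugging Face repository ID of the LLM
    model_basename: Optional[str]   # specific weights file (quantized models); None = whole checkpoint
    embedding_model_name: str       # model used to embed document chunks

    @property
    def is_single_file(self) -> bool:
        # True when a specific weights file is selected inside the repository.
        return self.model_basename is not None


# Example values only: Vicuna-7B for generation, an Instructor model for embeddings.
DEFAULT = ModelConfig(
    model_id="TheBloke/vicuna-7B-1.1-HF",
    model_basename=None,
    embedding_model_name="hkunlp/instructor-large",
)


def with_model(config: ModelConfig, model_id: str, model_basename: Optional[str] = None) -> ModelConfig:
    """Swap the LLM while keeping every other setting unchanged."""
    return replace(config, model_id=model_id, model_basename=model_basename)
```

Because every other module would read from this one object, switching LLMs becomes a single change, for example `with_model(DEFAULT, "example-org/other-model", "model.q4.gguf")` with hypothetical names, while the embedding model and the rest of the pipeline stay as they were.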

Artificial intelligence

LocalGPT represents a significant step forward, offering a pathway to private, localized AI interactions without the need for specialized hardware. The video tutorial provides a comprehensive guide to setting up the LocalGPT API on your system and running an example application built on top of it, making it accessible to a wide range of users.

Whether you’re an individual looking to explore AI, a business seeking to integrate AI without compromising on privacy, or a developer aiming to build innovative applications, LocalGPT offers a promising solution. Its combination of privacy, flexibility, and ease of use paves the way for a new era of personalized AI assistance, all within the confines of your local environment or cloud instance. The future of AI is here, and it starts with LocalGPT.

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, Aboutworldnews may earn an affiliate commission. Learn about our Disclosure Policy.