Generative AI, a term often associated with generating images, music, speech, code, video, or text, has evolved significantly over the past decade. Recent advances, particularly foundation models such as BERT, GPT, T5, and DALL-E, show how AI can produce detailed essays or complex images from short prompts.

Generative AI foundation models are large-scale models trained on vast amounts of data to perform a variety of tasks. They serve as the “foundation” on which specific applications, or “tasks,” can be built through fine-tuning or adaptation. The two halves of the name describe different properties. Generative models aim to produce new data samples that resemble the data they were trained on. Foundation models are pre-trained on colossal datasets, often drawn from a significant portion of the internet; their strength lies in the broad knowledge they acquire, which can later be tailored to specific tasks or domains.

The difference between generative and foundation models

Large Language Models (LLMs) such as BERT, GPT, and T5, which are trained on vast amounts of text, streamline the processing and generation of natural language for a wide range of tasks. The neural network architecture behind this progress is the transformer, which has been pivotal in the rapid evolution of generative AI. Here’s a brief overview:

- Generative Models: At their core, generative models aim to generate new data samples that resemble their training data. For instance, Generative Adversarial Networks (GANs) are a type of generative model in which two networks (a generator and a discriminator) are trained in tandem to produce new, synthetic instances of data.

- Foundation Models: These are models pre-trained on massive datasets, often encompassing a significant portion of the internet or other large corpora. The idea is to capture broad knowledge from these datasets and then fine-tune the model on specific tasks or domains.

- Examples include BERT, GPT, RoBERTa, and T5. These models are primarily used in natural language processing but can be applied to other domains as well.
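To make the idea of a generator and discriminator “trained in tandem” more concrete, here is a minimal sketch of the two opposing loss functions used in a standard GAN. It assumes the discriminator outputs a probability that its input is real, and it computes only the losses; the networks themselves and the training loop are omitted. The function names are illustrative, not taken from any particular library.

```python
import math

# Small constant that keeps log() finite when a probability is exactly 0 or 1.
EPS = 1e-12


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy for the discriminator.

    d_real is D(x), the probability the discriminator assigns to a real sample
    being real; d_fake is D(G(z)), the probability it assigns to a generated
    sample being real. The loss is low when d_real -> 1 and d_fake -> 0.
    """
    return -(math.log(d_real + EPS) + math.log(1.0 - d_fake + EPS))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the generator fools D (d_fake -> 1)."""
    return -math.log(d_fake + EPS)


if __name__ == "__main__":
    # As the generator gets better at fooling the discriminator (d_fake rises),
    # the generator's loss falls while the discriminator's loss rises.
    for d_fake in (0.1, 0.5, 0.9):
        print(
            f"D(G(z))={d_fake}: "
            f"D loss={discriminator_loss(0.9, d_fake):.3f}, "
            f"G loss={generator_loss(d_fake):.3f}"
        )
```

In a full GAN, each training step alternates between updating the discriminator to lower `discriminator_loss` and updating the generator to lower `generator_loss`; because each network's gain is the other's loss, the two improve together.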

Generative AI foundation models

- Advantages:

  - Transfer Learning: Once a foundation model is trained, it can be fine-tuned on a smaller, task-specific dataset, saving time and computational resources.

  - Broad Knowledge: These models capture a wide range of information, making them versatile across many applications.

  - Performance: In many cases, foundation models have set new state-of-the-art results on AI benchmarks.

- Challenges and Criticisms:

  - Bias and Fairness: Foundation models can inadvertently learn and perpetuate biases present in their training data, which raises significant fairness and ethical concerns.

  - Environmental Concerns: Training such large models requires substantial computational resources, raising concerns about energy consumption and carbon footprint.

  - Economic Impacts: As these models become integrated into more sectors, there are concerns about their impact on jobs and economic structures.

- Applications:

- Natural Language Processing: Tasks like translation, summarization, and question-answering benefit from foundation models.

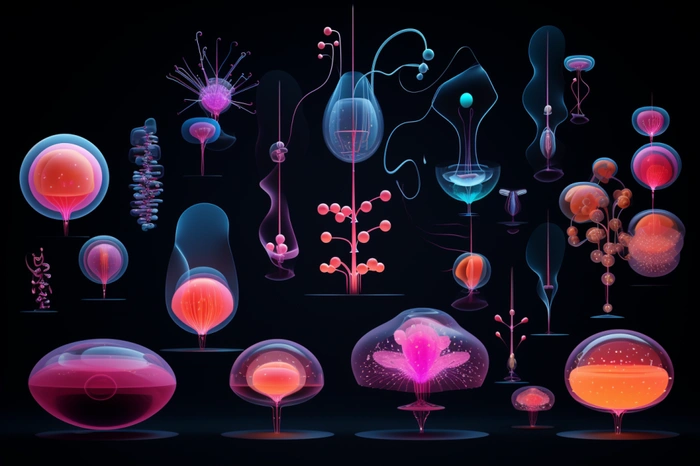

- Vision: Image classification, object detection, and even art generation.

- Multimodal: Combining vision and language, such as in image captioning or visual question answering.
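The transfer-learning advantage listed above can be sketched in plain Python. The example assumes a frozen, pre-trained encoder and trains only a small classification head on a handful of labeled examples, a lightweight form of adaptation often called linear probing. `pretrained_features` is a hypothetical toy stand-in for a real model's encoder, and all function names here are illustrative.

```python
import math
from typing import Callable, List

Vector = List[float]


def pretrained_features(text: str) -> Vector:
    """HYPOTHETICAL stand-in for a frozen, pre-trained encoder.

    A real foundation model would return a learned embedding. This toy version
    counts a few positive and negative words so the sketch runs without any
    third-party dependencies.
    """
    words = text.lower().split()
    positive = sum(w in {"great", "good", "love"} for w in words)
    negative = sum(w in {"bad", "poor", "hate"} for w in words)
    return [float(positive), float(negative), 1.0]  # last entry is a bias term


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(
    encoder: Callable[[str], Vector],
    texts: List[str],
    labels: List[int],
    lr: float = 0.5,
    epochs: int = 200,
) -> Vector:
    """Train only a logistic-regression head on top of a frozen encoder."""
    features = [encoder(t) for t in texts]  # computed once; encoder is never updated
    weights = [0.0] * len(features[0])
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
            # For binary cross-entropy, d(loss)/d(w_i) = (p - y) * x_i
            weights = [w - lr * (p - y) * xi for w, xi in zip(weights, x)]
    return weights


def predict(encoder: Callable[[str], Vector], weights: Vector, text: str) -> float:
    """Probability that `text` belongs to the positive class."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, encoder(text))))


if __name__ == "__main__":
    # A tiny labeled dataset: 1 = positive review, 0 = negative review.
    texts = ["great product love it", "bad and poor quality", "good value", "i hate it"]
    labels = [1, 0, 1, 0]
    head = fine_tune_head(pretrained_features, texts, labels)
    score = predict(pretrained_features, head, "good and great")
    print(f"P(positive | 'good and great') = {score:.3f}")
```

Full fine-tuning would also update the encoder's own weights; training only a small head is the cheaper variant, and it illustrates why adapting a pre-trained model needs far less labeled data than training one from scratch.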

Given these capabilities, it’s unsurprising that the global generative AI market is projected to reach a valuation of $8 billion by 2030, at an estimated compound annual growth rate (CAGR) of 34.6%, according to Korn Ferry and IBM. The technology’s adoption across consumer and enterprise sectors, exemplified by OpenAI’s ChatGPT, launched in November 2022, underscores its transformative nature.
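To put a 34.6% compound annual growth rate (CAGR) in perspective, the sketch below applies the standard compound-growth formula, where a value grows by a factor of (1 + rate) each year. It only illustrates the arithmetic behind the cited rate; the article gives no base year or starting value, so none is assumed here, and the function names are illustrative.

```python
import math


def project_value(start: float, rate: float, years: float) -> float:
    """Value after growing at a constant annual `rate` (0.346 means 34.6%) for `years`."""
    return start * (1.0 + rate) ** years


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def doubling_time(rate: float) -> float:
    """Years for a quantity to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + rate)


if __name__ == "__main__":
    rate = 0.346  # the estimated CAGR cited in the article
    print(f"Growth multiple after one year: {project_value(1.0, rate, 1):.3f}x")
    print(f"Doubling time: {doubling_time(rate):.2f} years")
```

At a 34.6% CAGR, a quantity roughly doubles every 2.3 years, which is why such a rate compounds quickly over a multi-year horizon.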

Generative AI is not just about creating; it’s about augmenting. It can support employees’ work, drive significant gains in productivity, and accelerate the AI development lifecycle. That acceleration lets businesses channel their resources into fine-tuning models for their unique needs. IBM Consulting’s findings support this, noting up to a 70% reduction in time to value for NLP tasks such as summarizing call center transcripts or analyzing customer reviews.

Moreover, by reducing the amount of labeled data required, foundation models make it considerably easier for businesses to experiment with AI, build efficient AI-driven automation, and deploy AI in mission-critical scenarios.

While the benefits are many, so are the challenges. Businesses and governments are already acknowledging the implications of this technology, and some have placed restrictions on tools like ChatGPT. Enterprises must address cost, effort, data privacy, intellectual property, and security when adopting and deploying these models.

Furthermore, as with any technology that wields such power, there are ethical considerations. Foundation models can inadvertently perpetuate biases present in their training data, making fairness and ethical deployment paramount.

As companies work to bring the capabilities of foundation models to every enterprise through a frictionless hybrid-cloud environment, the outlook is promising. However, businesses and policymakers alike must proceed with caution, ensuring that these powerful models are deployed responsibly and to the benefit of all.

In essence, generative AI foundation models have reshaped the landscape of AI research and applications due to their versatility and performance. However, their rise also brings forth a set of ethical, environmental, and economic challenges that the community must address.

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, Aboutworldnews may earn an affiliate commission. Learn about our Disclosure Policy.